
How to Calculate the ROI of Data Observability

Learn how to measure the ROI of data observability to make sure your data management investments pay off through better data quality and trust.

Did you know that a data observability tool can save a company up to $150,000 a year just by making its analytics dashboards more accurate? Investing in data observability delivers both direct cost savings and intangible benefits: it helps teams fix data issues quickly and build stronger data quality.

Stakeholders need a clear way to calculate the ROI of data observability. That means looking at every part of the investment in better data management: fixing specific problems, understanding the costs of poor data, and planning how to resolve data quality issues.

Decube's platform can help here. It provides real-time insights and a single source of truth, so your organization can spot and fix data errors early and keep its systems running well. It could save between $20,000 and $70,000 a year, an ROI of 25% to 87.5%. Understanding and carefully calculating your data observability ROI is key to demonstrating its ongoing value.
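
As a quick arithmetic check, the quoted range is consistent with the lift / investment formula from the best-practices section if one assumes a hypothetical annual investment of $80,000. The article does not state the investment, so treat that figure as an assumption; the sketch only shows how the two savings figures map to the two ROI percentages.

```python
# Hypothetical check of the quoted ROI range using ROI = lift / investment.
# ASSUMPTION: an annual investment of $80,000; the article does not state this figure.
ANNUAL_INVESTMENT = 80_000


def lift_roi(annual_savings: float, investment: float) -> float:
    """Return ROI as a percentage, computed as lift (savings) / investment."""
    return annual_savings / investment * 100


for savings in (20_000, 70_000):
    print(f"${savings:,} saved -> ROI {lift_roi(savings, ANNUAL_INVESTMENT):.1f}%")
# $20,000 saved -> ROI 25.0%
# $70,000 saved -> ROI 87.5%
```

Note that this formula counts gross savings, not net gain; a conventional ROI that subtracts the investment first would give a very different number for the same inputs.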

Key Takeaways

- Improving analytics dashboard accuracy with data observability could save up to $150,000 per year.

- ROI from data observability tools can range from 25% to 87.5%.

- Fixing issues such as duplicate new-user orders and improving fraud detection can save as much as $100,000 per issue per year.

- Calculating ROI for data observability covers both direct costs, such as implementation, and less tangible benefits, such as better decision-making.

- Data observability tools like Decube provide real-time insights and improve data quality, which is crucial for business operations.

Introduction

Today, keeping data systems reliable and trustworthy is a priority for many companies. With Decube's data observability services, organizations can resolve data problems quickly, so decisions rest on high-quality data. Good data management across the company also prevents revenue loss and cuts costs.

Importance of data observability in modern enterprises

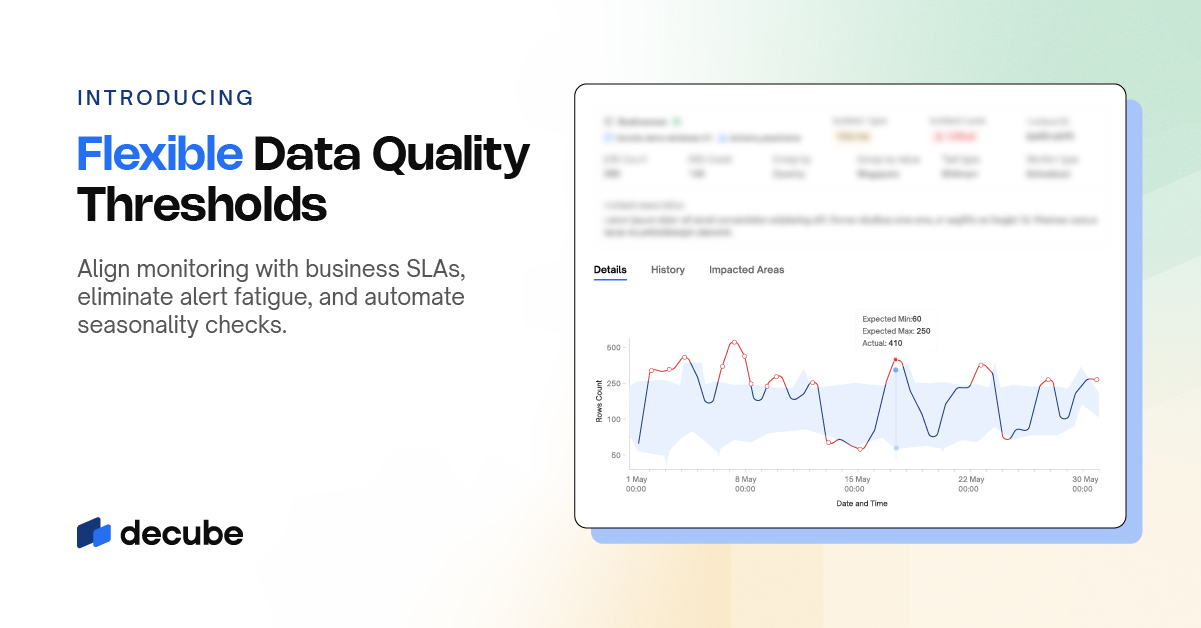

Data observability is essential in today's business world. It provides real-time visibility into complex data systems, so businesses can spot and fix data issues early and keep their data fresh, accurate, and dependable for decision-making.

Tools such as Decube's keep data quality high by monitoring accuracy, freshness, and other quality signals. This continuous monitoring means fewer unexpected data problems, greater trust in data, and smoother business operations.

Overview of ROI (Return on Investment) in the context of data observability

Calculating the ROI of data observability spending is crucial because it shows how well a business is using its data investments. A repeatable process, much like CI/CD in software delivery, makes savings and productivity gains easier to measure.

ROI reflects the money saved by fixing major data issues. Knowing what these issues cost helps businesses spend wisely on data management, and case studies highlight the benefits of data tools, such as improved efficiency and lower costs.

Decube's data tools show how businesses can save money by optimizing their data. This detailed view of the benefits makes a strong case for investing in better data use, with the promise of higher productivity, lower costs, and better business results.

Understanding ROI and Data Observability

Understanding the ROI of data observability starts with understanding why ROI matters for data monitoring. This section covers how ROI measures profitability and how it applies to the many components of data observability.

Definition of ROI

ROI stands for Return on Investment. It measures how efficient and profitable an investment is by comparing its returns with its costs. In data observability, ROI justifies spending on data quality monitoring, pipeline monitoring, and related tools. Calculating it includes accounting for the number of engineers needed and what they cost.
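
Written out, the conventional definition compares net return with cost. In the sketch below, B is total benefits and C is total cost; the breakdown of C assumes, for illustration only, that engineering effort is priced as n_eng engineers at a fully loaded cost c_eng each, on top of tool spending C_tools. These symbols are introduced here and do not come from a specific vendor's model.

```latex
\mathrm{ROI} = \frac{B - C}{C} \times 100\%,
\qquad
C = C_{\text{tools}} + n_{\text{eng}}\, c_{\text{eng}}
```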

Components of Data Observability

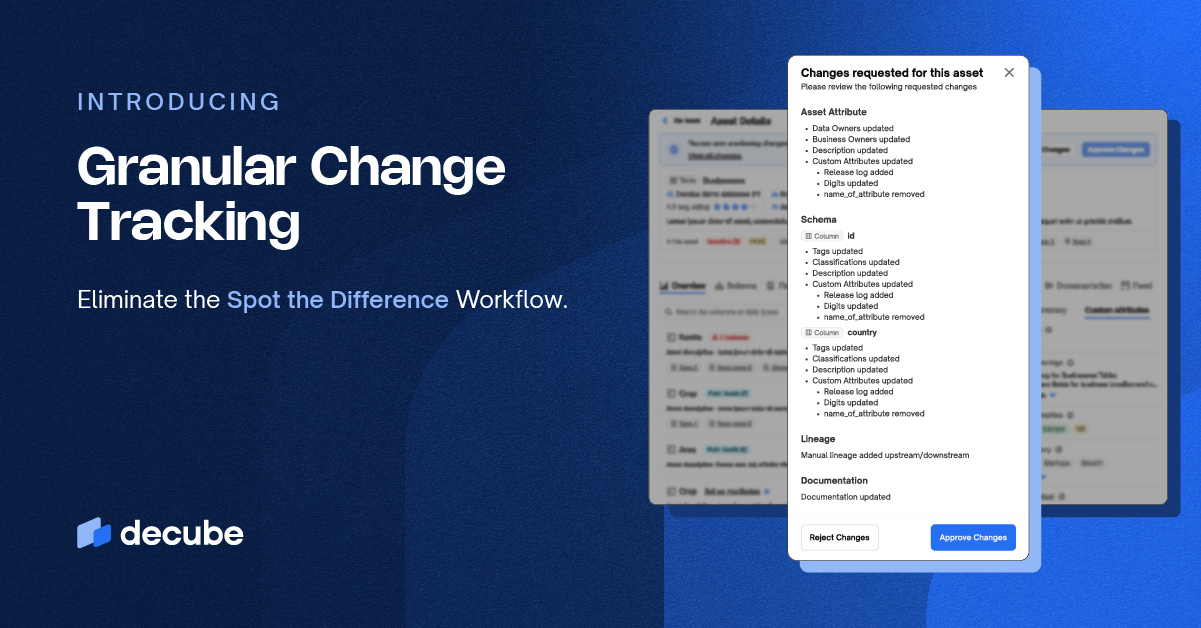

Data observability has several components that together keep data reliable: monitoring data quality, tracking data incidents, tracing data lineage (where data comes from), and enforcing strong data governance rules to keep it accurate. Setting all of these up takes time, technical solutions, and process changes. A good data observability plan resolves issues fast and keeps data flowing smoothly through its pipelines.
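
To make the data quality component concrete, here is a minimal sketch of three common checks (freshness, volume, and null rate) run against metadata for a single table. The `TableSnapshot` structure, the thresholds, and the example values are hypothetical; a real platform such as Decube collects this metadata automatically and adds lineage and governance on top, which this sketch does not attempt.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class TableSnapshot:
    """Hypothetical metadata collected for one table on one pipeline run."""
    last_loaded_at: datetime
    row_count: int
    null_count: int  # nulls in one column we care about


def check_table(snap: TableSnapshot, expected_rows: int, now: datetime,
                max_staleness: timedelta = timedelta(hours=24),
                volume_tolerance: float = 0.5,
                max_null_rate: float = 0.01) -> list[str]:
    """Return human-readable alerts; an empty list means all checks passed."""
    alerts = []
    # Freshness: has the table been loaded recently enough?
    if now - snap.last_loaded_at > max_staleness:
        alerts.append("freshness: table is stale")
    # Volume: is the row count within +/- tolerance of what we expect?
    if abs(snap.row_count - expected_rows) > volume_tolerance * expected_rows:
        alerts.append("volume: row count outside expected range")
    # Quality: is the null rate in the monitored column acceptable?
    if snap.row_count and snap.null_count / snap.row_count > max_null_rate:
        alerts.append("quality: null rate too high")
    return alerts


now = datetime(2024, 1, 2, 12, tzinfo=timezone.utc)
snap = TableSnapshot(last_loaded_at=now - timedelta(hours=30), row_count=400, null_count=20)
print(check_table(snap, expected_rows=1_000, now=now))
```

All three checks fire for this example snapshot: it was loaded 30 hours ago, it has 400 rows instead of roughly 1,000, and 5% of the monitored column is null.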

Importance of Measuring ROI for Data Observability Initiatives

Measuring the ROI of data observability shows its concrete benefits and justifies the spending. It demonstrates to data teams that monitoring data leads to better quality, higher revenue, and lower costs. For example, an ROI calculation looks at how much is saved on data validation and on storing historical data. Resolving problems quickly and making better data-driven decisions also bring real gains and improve how a company operates.

https://youtube.com/watch?v=irjfpOdguCo

Decube's data observability platform is a good example. It shows that investing in data monitoring can pay off substantially by improving data quality and reliability. By monitoring pipelines closely and catching issues early, companies gain better control over their data and see significant operational improvements.

Key Benefits of Data Observability

Data observability makes data systems run better, which benefits the business. It keeps data accurate and operations smooth, delivering a return greater than the investment (ROI).

Improved data quality and reliability

Platforms like Decube make data more reliable and trustworthy. This is crucial for businesses like Uber, where lost or bad data can cost a great deal of money. Strong observability reduces these risks and keeps data dependable.

Reduced downtime and faster issue resolution

With data observability, downtime shrinks and data problems are fixed faster. Applications keep running smoothly, which keeps customers satisfied and revenue flowing. Monitoring data closely also spares companies the high costs of bad data.

Enhanced decision-making capabilities

Better data, the result of close observation, helps companies make smarter decisions. Seeing the full picture of their data and how it connects helps them plan for the future and get the most out of their data.

Cost savings from proactive data management

Proactive data management means fewer emergency fixes and data leaks. By tackling problems before they escalate, businesses save substantially: they avoid costs such as duplicate user orders or excess inventory. Careful data management saves a lot of money, which is why strong data observability strategies are key.

Identifying Metrics for Calculating ROI

Choosing the right metrics to measure ROI is key in showing the benefits of data observability tools. An organized method is necessary to evaluate cost savings, boosts in productivity, and overall business effects.

Direct Cost Savings

Direct cost savings are a central part of ROI. With tools like Decube's Data Observability, companies can avoid building in-house solutions, which saves the engineering cost of creating and running them. A formula based on the cost per engineer and the number of engineers needed makes these potential savings clear. These tools can also reduce infrastructure costs by making data processing, storage, and hosting more efficient.
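
The engineer-based formula can be sketched as a simple build-vs-buy comparison. This is a minimal illustration, assuming the in-house cost is engineers times a fully loaded cost per engineer plus infrastructure; the function names and every dollar figure below are hypothetical.

```python
# Hypothetical build-vs-buy model; all inputs are illustrative assumptions.

def in_house_cost(engineers: float, cost_per_engineer: float, infra_cost: float = 0.0) -> float:
    """Annual cost of building and running an in-house observability solution."""
    return engineers * cost_per_engineer + infra_cost


def build_vs_buy_savings(engineers: float, cost_per_engineer: float,
                         tool_cost: float, infra_cost: float = 0.0) -> float:
    """Direct annual savings from buying a tool instead of building one in-house."""
    return in_house_cost(engineers, cost_per_engineer, infra_cost) - tool_cost


# Example: 2 engineers at a fully loaded $150,000 each, $20,000 of infrastructure,
# versus a $120,000 annual tool subscription.
savings = build_vs_buy_savings(2, 150_000, tool_cost=120_000, infra_cost=20_000)
print(f"${savings:,.0f}")  # $200,000
```

A fuller model would also subtract any engineering time still needed to operate the purchased tool, since buying rarely reduces that effort to zero.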

Productivity Improvements

Productivity improvements also drive ROI. A key metric is how quickly your team resolves data problems, since faster resolution lowers operating costs. Equally important are the savings from detecting and fixing data quality problems early. Together, these changes make data management more effective.
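
One way to put a number on faster resolution is to multiply the engineering hours saved per incident by a loaded hourly rate. The sketch below assumes that simple model; the incident count, resolution times, and hourly rate are hypothetical.

```python
def productivity_savings(incidents_per_year: int, hours_before: float,
                         hours_after: float, hourly_rate: float) -> float:
    """Annual engineering cost saved by resolving each incident faster."""
    hours_saved = incidents_per_year * (hours_before - hours_after)
    return hours_saved * hourly_rate


# Hypothetical: 40 incidents a year, resolution time cut from 10 h to 4 h, $90/h loaded rate.
print(productivity_savings(40, 10, 4, 90))  # 21600
```

This counts only engineering time; revenue lost while an incident is open is a separate benefit, covered by the business-impact and net-gain calculations.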

Business Impact

Business impact goes beyond direct savings to include better decisions and better products built on your data. You can measure the ROI of trusted data by tracking quality improvements and their effect on revenue. Better decisions increase the revenue your data generates and help you ship products faster, which keeps customers happy. Combined with cost savings and operational gains, this shows the full benefit of these tools.

Using these measures, companies can make a strong case for using tools like Decube's. These metrics show the savings and improved operations that come from better data management.

Calculating the Costs of Implementing Data Observability

When planning for data observability, account for both setup and ongoing costs to understand the full financial picture.

Initial Setup Costs

Setting up a data observability platform like Decube can involve significant initial costs. These include purchasing tools, deployment, and customization, as well as hiring engineers and any upfront infrastructure.

Supporting infrastructure may require additional spending on your data analytics stack. This step is critical for smooth integration with your existing data systems.

Ongoing Costs

After setup, there are continual costs to run and improve the platform. These include data warehouse and cloud hosting costs. Also, don't forget about staff training and change management.

Keeping data quality high is essential: IBM has estimated that bad data costs the US economy $3.1 trillion a year. There are also ongoing operational and compliance costs to consider.

Together, initial and ongoing costs show why a sound investment strategy matters, one that weighs the benefits of data observability against its real costs. Thorough cost calculations help businesses prepare and estimate the return they can expect.
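
A simple way to combine the two cost categories is total cost of ownership over a planning horizon: the one-time setup cost plus the recurring costs for each year. The sketch below assumes recurring costs stay flat from year to year; the cost categories mirror those listed above, but every figure is hypothetical.

```python
def total_cost_of_ownership(setup_cost: float, annual_costs: dict[str, float],
                            years: int) -> float:
    """One-time setup cost plus recurring annual costs over a planning horizon."""
    return setup_cost + years * sum(annual_costs.values())


# Hypothetical figures, for illustration only.
annual = {
    "license": 60_000,
    "warehouse_and_hosting": 15_000,
    "training_and_change_management": 5_000,
}
print(total_cost_of_ownership(setup_cost=25_000, annual_costs=annual, years=3))  # 265000
```

Keeping costs in a named dictionary makes it easy to see which category dominates and to add compliance or operational costs as separate lines.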

Building a ROI Calculation Model

Building an ROI model for data observability involves four steps: gather baseline data, estimate the benefits, calculate the net gains, and determine the ROI percentage.

Gathering baseline data

Start by collecting metrics on the current state of your data. For example, it is vital to know how long it takes to detect data issues, because detection delays affect business decisions and operations. Reviewing past data incidents can reveal a lot of room for improvement.

Another key metric is how long it takes to resolve data problems. With the right tools to find the root cause, you can fix them faster. It is also important to know the cost of leaving data problems unresolved, which can exceed $600,000 a year.
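
A commonly used way to turn these two metrics into a single baseline number is data downtime: the number of incidents multiplied by the sum of time to detection and time to resolution. The sketch below assumes that definition plus a hypothetical hourly cost of downtime; the incident count, times, and rate are invented for illustration.

```python
def data_downtime_hours(incidents: int, mean_ttd_hours: float, mean_ttr_hours: float) -> float:
    """Data downtime = number of incidents x (time to detection + time to resolution)."""
    return incidents * (mean_ttd_hours + mean_ttr_hours)


def downtime_cost(downtime_hours: float, cost_per_hour: float) -> float:
    """Baseline annual cost of data downtime at an assumed hourly cost."""
    return downtime_hours * cost_per_hour


# Hypothetical baseline: 50 incidents a year, detected in 24 h and resolved in 12 h on average.
baseline = data_downtime_hours(50, 24, 12)
print(baseline, downtime_cost(baseline, cost_per_hour=400))  # 1800 720000
```

Recording the baseline this way lets you re-run the same calculation after adoption and attribute the difference to faster detection, faster resolution, or fewer incidents.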

Estimating benefits

Next, estimate the benefits observability brings. Consider the Mars Climate Orbiter, a $125 million loss caused by a unit-conversion error, or IBM's estimate that bad data costs the US economy $3.1 trillion a year. By avoiding such problems, a company can save a lot of money. Tools like Decube help detect data issues early and prevent many of them, making a company more efficient.

Calculating net gains

Net gains are the financial benefits of better data systems after costs. For instance, a SaaS company can lose $45,000 in just three days if its data is wrong. Add up the money saved and the value of better decisions, then subtract the costs; a positive result makes the case for further investment in observability and in your data team.
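
The SaaS example can be turned into a rough net-gain estimate. This sketch assumes losses accrue evenly ($45,000 over three days, or $15,000 per day) and that observability shortens each incident; the incident count, the one-day detection time, and the $80,000 annual cost are hypothetical.

```python
# From the example above: $45,000 lost over three days of bad data.
daily_loss = 45_000 / 3  # $15,000 per day, assuming losses accrue evenly


def avoided_loss(incidents: int, days_without_tool: float, days_with_tool: float,
                 daily_loss: float) -> float:
    """Loss avoided because incidents are caught and fixed sooner."""
    return incidents * (days_without_tool - days_with_tool) * daily_loss


def net_gain(benefits: float, costs: float) -> float:
    """Benefits minus costs over the same period."""
    return benefits - costs


# Hypothetical: 4 such incidents a year, caught after 1 day instead of 3, $80,000 annual cost.
benefits = avoided_loss(4, 3, 1, daily_loss)
print(benefits, net_gain(benefits, 80_000))  # 120000.0 40000.0
```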

Determining the ROI percentage

The final step is the ROI percentage, which compares the benefits with the total investment. For example, a model failure might cost an online shop $50,000 in five days. Demonstrating high returns on data improvements is persuasive and shows the value of tools like Decube for improving metrics and saving money.
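
A minimal sketch of this last step, assuming the conventional definition (benefits minus investment, divided by investment) and adding a payback period as a second view. The scenario of avoiding two $50,000 model failures a year against an $80,000 annual investment is hypothetical.

```python
def roi_percent(total_benefits: float, total_investment: float) -> float:
    """Conventional ROI: (benefits - investment) / investment, as a percentage."""
    return (total_benefits - total_investment) / total_investment * 100


def payback_months(total_investment: float, annual_benefits: float) -> float:
    """Months until cumulative benefits cover the investment, assuming even accrual."""
    return total_investment / annual_benefits * 12


# Hypothetical: avoiding two $50,000 model failures a year, against an $80,000 annual investment.
benefits = 2 * 50_000
investment = 80_000
print(round(roi_percent(benefits, investment), 1))     # 25.0
print(round(payback_months(investment, benefits), 1))  # 9.6
```

Because this definition subtracts the investment first, it gives lower figures than a lift / investment ratio on the same inputs; state which definition you use when presenting results.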

Real-World Examples and Case Studies

Looking at how data observability is used in the real world shows us its value and potential. We will study how big businesses and mid-sized companies benefit. This will help us understand the impact of data observability tools on their operations and finances.

Case study 1: Large enterprise implementation

After Contentsquare adopted a data observability platform, it reduced its time to detect data issues by 17%, showing how quickly these tools can make a difference. BlaBlaCar cut its time to resolve issues in half, which shows their value for large companies.

Case study 2: Mid-sized company success story

Choozle reduced its data downtime by 80% with data observability, showing the power of these tools for mid-sized companies. The tools helped in several ways, such as anticipating data problems, and they shrank Choozle's time to resolve issues from a day to just an hour. The result is more efficient data handling that supports both revenue and cost savings.
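
To translate case-study percentages into your own terms, express each improvement relative to its starting value and apply it to your baseline. The sketch below does this for the reported Choozle figures; the 1,800-hour downtime baseline is hypothetical and not from the case study.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative improvement as a percentage of the 'before' value."""
    return (before - after) / before * 100


# Choozle's reported time to resolution fell from about a day (24 h) to an hour.
print(f"{percent_reduction(24, 1):.1f}% shorter time to resolution")  # 95.8%

# Applying the reported 80% downtime reduction to a HYPOTHETICAL 1,800-hour baseline.
baseline_hours = 1_800
remaining_hours = baseline_hours * (100 - 80) / 100
print(remaining_hours)  # 360.0
```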

Lessons learned from different industries

Decube's experience shows that businesses in every sector need reliable, available data. Data observability makes working with data smoother and faster, keeps data quality high, and finds and fixes errors quickly. This is essential across many fields. Tools like Decube support companies as they adopt new technology, offering a complete data observability solution for various industries.

Best Practices for Maximizing ROI

Maximizing ROI from data observability requires regular measurement and smart strategies. Tools like Decube's Data Observability, with automated data quality checks and data lineage tracing, can raise your ROI significantly.

To calculate ROI, you can use formulas such as ROI = Lift / Investment, or ROI = (Data product value - Data downtime) / Data investment, where data downtime is expressed as its cost. These methods help ensure that every dollar spent leads to real business benefits.
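
Both formulas can be written as small functions. This is a sketch under the assumption that all inputs are annual dollar amounts, which means data downtime must first be converted to a cost (for example, downtime hours times an hourly cost) before it can be subtracted from data product value. The example figures are hypothetical.

```python
def roi_from_lift(lift: float, investment: float) -> float:
    """ROI = lift / investment, returned as a ratio (multiply by 100 for percent)."""
    return lift / investment


def roi_from_data_product_value(data_product_value: float, data_downtime_cost: float,
                                data_investment: float) -> float:
    """ROI = (data product value - data downtime cost) / data investment, as a ratio."""
    return (data_product_value - data_downtime_cost) / data_investment


# Hypothetical annual figures in dollars.
print(roi_from_lift(120_000, 80_000))                          # 1.5
print(roi_from_data_product_value(500_000, 100_000, 200_000))  # 2.0
```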

FAQ

What is the importance of data observability in modern enterprises?

Data observability is crucial for keeping data systems trustworthy. It allows companies to spot and fix data problems quickly. This leads to reliable data for making smart decisions and better overall operations.

How do you define ROI in the context of data observability?

ROI shows how profitable it is to invest in data monitoring tools by comparing the financial benefits with the costs. Benefits include direct savings as well as less measurable gains such as better decisions and lower risk.

What are the primary components of data observability?

Its key components include data quality checks, monitoring of data as it moves through systems, data lineage tracking, anomaly detection, and measuring data reliability.

Why is it crucial to measure ROI for data observability initiatives?

Measuring ROI makes sure investments in data monitoring pay off. It shows the value in better business results, like earning more, saving costs, and working more efficiently.

What are the key benefits of data observability?

The advantages include clearer, more reliable data, less downtime, faster issue resolution, better decision-making, and cost savings from proactive data management.

How do you identify metrics for calculating ROI in data observability?

Look at how much money is saved by not building your own solution, whether work gets done faster, and how the tool affects major decisions and customer satisfaction.

What are the costs involved in implementing data observability?

Setup includes buying and installing monitoring tools. After that, there are ongoing costs such as maintenance, team training, and possible process changes.

How do you build a ROI calculation model for data observability?

To create an ROI model, first measure the baseline before the change. Then estimate the tangible and intangible benefits, calculate the net gain, and compute the ROI to judge whether the investment is worthwhile.

Can you provide examples of real-world implementations of data observability and their ROI?

Case studies from large and mid-sized businesses show strong returns from data monitoring tools: better data, less downtime, happier customers, and lower costs.

What are some best practices for maximizing ROI in data observability?

Helpful tips are keeping track of ROI well, building trust in handling data, always checking and updating how you monitor data, and using machine learning to keep data quality high.
